
S2ENGINE HD

1.4.6

Official manual about S2Engine HD editor and programming


Just like the Physics system, the AI system is implemented in a separate module. The AI system has its own scene representation, composed of what we call AIObjects.

There are various types of AIObjects. Some are explicitly derived from SceneObject, while others are implicitly managed by the AI subsystem:

Areas (see AIArea), Points (see AIPoint) and Obstacles are objects needed by the navigation system for computing paths or choosing targets to reach.

Agents are all the characters controlled by the AI (NPCs), i.e. objects that move along paths.

Occluders are objects that block an Agent's sight.

A scene object can be an occluder, an obstacle, or both. To make a scene object an occluder or an obstacle, set its "isOccluder" and/or "isObstacle" params in the Editor. When these params are set, the AI system creates an AIObject and associates it with the object.

Agents are the characters of the AI system. To make a Character Entity an Agent, you have to notify the AI system that you want to create an agent and associate it with the character. You can do this with the AICreateCharacter function. You can make an agent see and hear all other AI objects by using AIEnableVision and AIEnableHearing. So if you want the player character to be visible to NPCs, you have to make the player character an Agent too. When you enable vision and/or hearing for an agent, you can use AIObjectIsViewed and/or AIObjectIsheard to query whether an AIObject is visible and/or audible to it.
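The manual does not describe how a vision query such as AIObjectIsViewed is decided internally. As a minimal sketch under stated assumptions, a typical test checks three things in order: distance, view cone and line of sight against occluders. The view range, field-of-view angle and circular occluder footprints below are assumptions, and `Agent`, `Circle` and `is_viewed` are hypothetical names, not S2Engine HD API.

```python
import math
from dataclasses import dataclass


@dataclass
class Circle:
    """Hypothetical occluder footprint: a circle on the ground plane."""
    x: float
    y: float
    radius: float


@dataclass
class Agent:
    """Hypothetical agent state used only by this sketch."""
    x: float
    y: float
    facing: float                     # heading in radians, 0 = +x axis
    view_range: float = 20.0          # assumed sight distance
    fov: float = math.radians(120.0)  # assumed full field-of-view angle


def segment_hits_circle(ax: float, ay: float, bx: float, by: float, c: Circle) -> bool:
    """True if the segment A-B passes closer than c.radius to the circle centre."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    t = 0.0
    if length_sq > 0.0:
        # Project the centre onto the segment and clamp to the segment's extent.
        t = max(0.0, min(1.0, ((c.x - ax) * dx + (c.y - ay) * dy) / length_sq))
    px, py = ax + t * dx, ay + t * dy
    return math.hypot(c.x - px, c.y - py) < c.radius


def is_viewed(agent: Agent, tx: float, ty: float, occluders: list) -> bool:
    """Range test, then view-cone test, then line-of-sight test against occluders."""
    dx, dy = tx - agent.x, ty - agent.y
    dist = math.hypot(dx, dy)
    if dist > agent.view_range:
        return False
    if dist > 0.0:
        # Angle between the facing direction and the direction to the target, wrapped to [-pi, pi].
        diff = math.atan2(dy, dx) - agent.facing
        diff = math.atan2(math.sin(diff), math.cos(diff))
        if abs(diff) > agent.fov / 2.0:
            return False
    return not any(segment_hits_circle(agent.x, agent.y, tx, ty, o) for o in occluders)
```

For example, a guard at the origin facing +x sees a point at (10, 0), does not see a point behind it at (-10, 0), and loses sight of (10, 0) once an occluder of radius 1 stands at (5, 0).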


Agent movement can be driven with the steering behaviour functions. You can also let Agents decide which place to reach by using the tactical functions.

The basic feature of the S2Engine HD AI engine is the Navigation system. During game simulation, NPCs must know how to reach a given place in the level map. The Navigation system tells NPCs which path to follow to go from a start position to an end position: this feature is generically called "path-finding". To make this possible, the game scene must be enriched with what we call AI helpers, i.e. objects that help Agents acquire knowledge about the environment around them: for example, points that label a certain place, connected points indicating a certain way to go, or areas delimiting the interiors of buildings.

The Navigation system performs two-level path-finding. The first level is a terrain-based path-finding system and the second level is a waypoint-based path-finding system. To perform terrain path-finding, the engine needs auxiliary data in addition to the data already contained in the terrain. A terrain is an open space without obstacles or walls, so finding a path on a terrain amounts to tracing a straight line between two points. But what if I place a building, or a big and complex obstacle? What if I place more than one building? How can an NPC avoid them, or find a way among them?

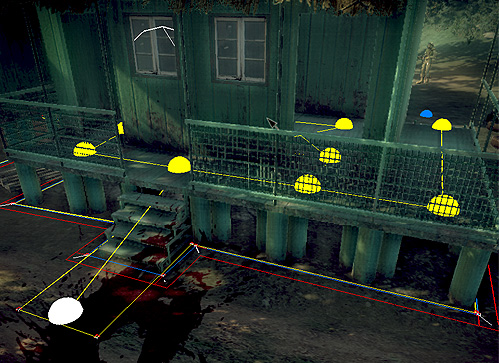

First, the Navigation system needs you to specify, on the terrain, the perimeter of the buildings, i.e. the perimeter of what we call a ForbiddenArea. As its name says, a ForbiddenArea is an area that NPCs cannot directly enter: when an NPC is close to such an area it tends to avoid it (see also Steering behaviours), and the path-finding algorithm takes ForbiddenAreas into account when computing a path. But what if I want the NPC to enter the building and reach a point inside it?
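To make the ForbiddenArea idea concrete, here is a minimal geometric sketch, assuming a ForbiddenArea is stored as a simple 2D polygon of perimeter points on the terrain (the manual does not specify its internal representation). It shows two checks a terrain path-finder needs: whether a position lies inside an area, and whether the straight line between two points stays out of every area. `point_in_polygon`, `segment_enters_polygon` and `straight_line_is_clear` are hypothetical names, not engine functions.

```python
def point_in_polygon(p, poly):
    """Even-odd ray casting: count the edges a ray from p towards +x crosses.
    Points exactly on the perimeter may be classified either way."""
    x, y = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge crosses the horizontal line through p
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def segment_enters_polygon(a, b, poly):
    """True if some part of segment a-b lies inside poly.

    The segment is cut at every parameter t where it meets an edge; each piece is
    then entirely inside or outside, so testing its midpoint is enough.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    ts = [0.0, 1.0]
    n = len(poly)
    for i in range(n):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % n]
        ex, ey = x2 - x1, y2 - y1
        denom = dx * ey - dy * ex
        if denom == 0.0:
            continue  # parallel edge: cannot cut the segment at a single point
        # Solve a + t*(b - a) = p1 + u*(p2 - p1) for t (segment) and u (edge).
        t = ((x1 - a[0]) * ey - (y1 - a[1]) * ex) / denom
        u = ((x1 - a[0]) * dy - (y1 - a[1]) * dx) / denom
        if 0.0 <= t <= 1.0 and 0.0 <= u <= 1.0:
            ts.append(t)
    ts.sort()
    for t0, t1 in zip(ts, ts[1:]):
        if t1 - t0 < 1e-12:
            continue  # duplicate cut (e.g. the segment passes through a vertex)
        tm = (t0 + t1) / 2.0
        if point_in_polygon((a[0] + tm * dx, a[1] + tm * dy), poly):
            return True
    return False


def straight_line_is_clear(start, end, forbidden_areas):
    """On open terrain the path is a straight line, unless it enters a ForbiddenArea."""
    return not any(segment_enters_polygon(start, end, poly) for poly in forbidden_areas)
```

Cutting the segment at every edge contact, instead of only counting proper crossings, also catches lines that pass exactly through two corners of a building, which a pure crossing test would miss.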

In this case we need the waypoint-based path-finding. Waypoints are points connected to each other that form a wide variety of possible paths; the Navigation system chooses the best path to follow based on the start and end points. When an NPC needs to enter, for example, a building and navigate inside it, you must specify an area containing waypoints (the WaypointArea) that delimits the building, just like the ForbiddenArea. But if the NPC avoids the building because of the ForbiddenArea, how can it enter the WaypointArea, i.e. the building? You have to pick one or more Waypoints (those near the entry/exit of the building) and mark them as "Entry" points. Of course, an entry waypoint must be outside the ForbiddenArea but inside the WaypointArea.
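The manual does not say which graph-search algorithm the waypoint level uses. The sketch below swaps in Dijkstra's algorithm to illustrate the role of Entry points: it runs one search outward from the goal waypoint, then picks the Entry waypoint that minimises "straight-line distance from the NPC to the entry" plus "graph distance from the entry to the goal". The graph layout (a dict of neighbour lists), the use of straight-line cost for the outside leg, and all names are assumptions for illustration.

```python
import heapq
import math


def dijkstra(graph, positions, source):
    """Shortest distances from source over an undirected waypoint graph.

    Returns (dist, next_hop): next_hop[n] is the neighbour of n one step closer to source.
    """
    dist = {source: 0.0}
    next_hop = {}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, math.inf):
            continue  # stale heap entry
        for nb in graph[node]:
            nd = d + math.dist(positions[node], positions[nb])
            if nd < dist.get(nb, math.inf):
                dist[nb] = nd
                next_hop[nb] = node
                heapq.heappush(heap, (nd, nb))
    return dist, next_hop


def path_into_area(start, goal, graph, positions, entries):
    """Waypoint path from the best Entry waypoint to goal, or None if no entry reaches it."""
    dist, next_hop = dijkstra(graph, positions, goal)
    reachable = [e for e in entries if e in dist]
    if not reachable:
        return None  # comparable to a "PathNotFound" outcome
    best = min(reachable, key=lambda e: math.dist(start, positions[e]) + dist[e])
    path = [best]
    while path[-1] != goal:
        path.append(next_hop[path[-1]])
    return path
```

In a full two-level scheme, the NPC would first reach the chosen entry waypoint with terrain path-finding (which keeps it out of the ForbiddenArea) and then follow the returned waypoint sequence inside the building.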

In the image, the yellow line is the WaypointArea and the red line is the ForbiddenArea.

To use path-finding from an object script, call the AIFindPath function. When the path computation is finished, the AI system sends a message to the caller object: on success it sends a "pathFound" message, otherwise it sends a "PathNotFound" message.
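Because the result of AIFindPath comes back as a message rather than as a return value, the caller has to react in its message handler. The following is a minimal sketch of that request/reply pattern in plain Python, not S2Engine script: `ToyAISystem`, `GameObject`, `find_path`, `update` and `on_message` are hypothetical stand-ins. The assumption that replies are delivered on a later update is ours; only the two message names come from the manual.

```python
from collections import deque


class GameObject:
    """Hypothetical script object that reacts to messages by name."""

    def __init__(self, name):
        self.name = name
        self.path = None
        self.state = "idle"

    def on_message(self, msg_name, params):
        if msg_name == "pathFound":
            self.path = params["path"]
            self.state = "moving"
        elif msg_name == "PathNotFound":
            self.state = "idle"  # e.g. choose another target here


class ToyAISystem:
    """Stand-in for the AI system: queues requests and replies with messages on update()."""

    def __init__(self, solver):
        self.solver = solver  # callable (start, goal) -> list of points, or None
        self.requests = deque()

    def find_path(self, caller, start, goal):
        # Returns immediately; the result is delivered later as a message.
        self.requests.append((caller, start, goal))

    def update(self):
        while self.requests:
            caller, start, goal = self.requests.popleft()
            path = self.solver(start, goal)
            if path is None:
                caller.on_message("PathNotFound", {})
            else:
                caller.on_message("pathFound", {"path": path})
```

The practical consequence is the same whatever the engine's timing: the object should not assume a path exists right after the request, and should keep its "waiting" logic in the handler for both messages.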


For more information see ForbiddenArea, WaypointArea.

For more information see Waypoint, Coverpoint, Patrolpoint.

While the Navigation system is needed to let NPCs know how to reach a given point, the Tactical system is needed to let NPCs decide which point is best to reach in a given situation. For example, if an NPC's weapon ammo is low, it must decide which point is best for reloading, such as the nearest point covered from the player's attack. To do this, the AI system uses AIPoints. These points indicate strategic positions (such as a covering place) and are inserted into the scene by level designers. The two main point types are:

They are also called Tactical points. Tactical points can be labelled with an ID that can be used to distinguish them when using the search functions (described below). In some cases the points to reach are found automatically by the system: this is the case for some cover points and for allowed points. Some cover points can be points beside objects marked as Obstacles; in this case designers don't need to place the point directly, because the system can compute it automatically, and you can use the AIGetNearestCoverPoint or AIGetFurthestCoverPoint functions. An allowed point is any point outside a ForbiddenArea; the system computes it automatically by checking various positions within a range around the agent, and you can obtain it with the AIGetNearestAllowedPoint function.
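Two of the searches described above can be sketched in a few lines: picking the nearest tactical point with a given ID, and finding the nearest allowed point by "checking various positions within a range around the agent". This is an illustrative sketch, not the implementation of AIGetNearestAllowedPoint: the concentric-ring sampling pattern, the ring and sample counts, polygonal ForbiddenAreas, and the names `nearest_point_with_id` and `nearest_allowed_point` are all assumptions.

```python
import math


def point_in_polygon(p, poly):
    """Even-odd ray casting test (same idea as a ForbiddenArea containment check)."""
    x, y = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y) and x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
            inside = not inside
    return inside


def nearest_point_with_id(agent_pos, points, wanted_id=None):
    """points: list of (position, id). Closest position with a matching id (any id if None)."""
    candidates = [pos for pos, pid in points if wanted_id is None or pid == wanted_id]
    if not candidates:
        return None
    return min(candidates, key=lambda pos: math.dist(agent_pos, pos))


def nearest_allowed_point(agent_pos, forbidden_areas, max_range=10.0, rings=5, samples_per_ring=16):
    """Sample concentric rings around the agent, nearest ring first, and return the first
    sample outside every ForbiddenArea (all samples on a ring are equally distant)."""
    def allowed(p):
        return not any(point_in_polygon(p, area) for area in forbidden_areas)

    if allowed(agent_pos):
        return agent_pos
    for r in range(1, rings + 1):
        radius = max_range * r / rings
        for k in range(samples_per_ring):
            angle = 2.0 * math.pi * k / samples_per_ring
            p = (agent_pos[0] + radius * math.cos(angle), agent_pos[1] + radius * math.sin(angle))
            if allowed(p):
                return p
    return None  # nothing allowed within max_range
```

Sampling is approximate: a finer ring spacing finds points closer to the true nearest allowed position, at the cost of more containment tests.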

There are several functions you can use to make an actor decide what to do:

Without doubt, the magic of the AI system lies in the steering behaviours engine. As the name says, steering behaviours are a set of predefined behaviours controlling actor movement. The movement of an actor is composed of two parts:

The two movements are independent, so an agent can move in one direction while facing a target in another direction (as, for example, when strafing). Some steering behaviours act on linear velocity (change of position) and others act on angular velocity (change of orientation). Usually, to move in a direction you need a target point, i.e. a point to reach, while to rotate you need a target point to face. In the same way, to set actor movements you need to specify a target point to reach and a target point to face. You can do this with the following functions:
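The independence of the two movement parts can be illustrated with the two simplest steering behaviours: a "seek" that produces a linear velocity towards the point to reach, and a "face" that produces an angular velocity towards the point to face. This is a generic sketch of the technique in 2D, not the engine's code; `seek`, `face`, `max_speed` and `max_turn_rate` are hypothetical names and parameters.

```python
import math


def seek(pos, target, max_speed):
    """Linear part: velocity of magnitude max_speed pointing from pos to the move target."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    d = math.hypot(dx, dy)
    if d == 0.0:
        return (0.0, 0.0)
    return (dx / d * max_speed, dy / d * max_speed)


def face(pos, heading, face_target, max_turn_rate):
    """Angular part: yaw rate (rad/s) turning heading towards the face target, clamped."""
    desired = math.atan2(face_target[1] - pos[1], face_target[0] - pos[0])
    # Shortest signed angle from heading to desired, wrapped to [-pi, pi].
    diff = math.atan2(math.sin(desired - heading), math.cos(desired - heading))
    return max(-max_turn_rate, min(max_turn_rate, diff))
```

Strafing falls out directly: an agent at the origin with move target (10, 0) and face target (0, 10) gets a linear velocity along +x from `seek`, while `face` turns it towards +y; once it faces +y, the angular part drops to zero and the agent keeps sliding sideways.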

Using these functions, you tell the steering behaviour system which targets it must follow when computing movements. Just like animations, steering behaviours can be blended together on a weight basis to form more complex behaviours: the greater the weight of a particular behaviour, the greater its influence on the resulting behaviour. So, after specifying the targets, you can set the behaviour weights to tell the steering system which behaviours it must take into account when computing movement. NOTE that movements are computed transparently: you have no direct access to actor movement (just as you have no direct access to rigid body position/orientation); you only have to specify which point is to be reached and which behaviours you want the actor to use.
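The manual states only that a larger weight means a larger influence; it does not give the combination formula. One common choice, shown here as a sketch under that assumption, is a weighted sum of each behaviour's velocity vector, clamped to the agent's maximum speed. The behaviour names used in the example and the `blend` function itself are hypothetical, not AISetSteeringWeight's implementation.

```python
import math


def blend(behaviour_outputs, weights, max_speed):
    """Weighted sum of per-behaviour velocity vectors, clamped to max_speed.

    behaviour_outputs: dict name -> (vx, vy); weights: dict name -> float.
    A behaviour with no weight entry contributes nothing (weight 0).
    """
    vx = sum(weights.get(name, 0.0) * v[0] for name, v in behaviour_outputs.items())
    vy = sum(weights.get(name, 0.0) * v[1] for name, v in behaviour_outputs.items())
    length = math.hypot(vx, vy)
    if length > max_speed:
        # Keep the blended direction but limit the magnitude.
        vx, vy = vx / length * max_speed, vy / length * max_speed
    return (vx, vy)
```

For example, with a "seek" output of (2, 0) and an "avoid" output of (0, 2), weights of 1.0 and 0.5 give (2.0, 1.0): the actor mostly heads for its target while drifting away from the obstacle.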

To set the weight of a behaviour, use the AISetSteeringWeight function. These are the behaviours you can set:

For example, if you want an actor to reach a particular point while facing another point, you could write:

Agent movements are updated by the AI system using steering behaviours.

When new velocities (angular and linear) are computed, the AI system sends a message named "Motion" to the entity associated with the AIAgent. This message has the following format:

name: Motion
params:

Usually this message is processed to obtain the direction of movement and the facing vector, which are then used to update the character's animation and/or physical motion.
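The exact parameters of the Motion message are not listed here, so the following sketch assumes they amount to a 2D linear velocity and a yaw rate, with the entity tracking its own heading. Under those assumptions, a handler can derive the movement direction, the facing vector and the angle of movement relative to facing, which is what an animation system typically needs to choose between walk-forward, strafe and walk-backward clips. `process_motion` and its arguments are hypothetical.

```python
import math


def process_motion(linear_velocity, angular_velocity, heading, dt):
    """Hypothetical Motion handler.

    linear_velocity: (vx, vy); angular_velocity: yaw rate in rad/s; heading: radians.
    Returns (move_dir, facing, new_heading, speed, relative_angle).
    """
    speed = math.hypot(linear_velocity[0], linear_velocity[1])
    if speed > 0.0:
        move_dir = (linear_velocity[0] / speed, linear_velocity[1] / speed)
    else:
        move_dir = (0.0, 0.0)
    # Integrate the angular velocity over this frame to get the new orientation.
    new_heading = heading + angular_velocity * dt
    facing = (math.cos(new_heading), math.sin(new_heading))
    # Movement direction relative to facing: about 0 forward, about +/-pi/2 strafing, about pi backwards.
    relative_angle = 0.0
    if speed > 0.0:
        rel = math.atan2(move_dir[1], move_dir[0]) - new_heading
        relative_angle = math.atan2(math.sin(rel), math.cos(rel))
    return move_dir, facing, new_heading, speed, relative_angle
```

The speed can drive animation playback rate, while the relative angle selects or blends the directional clips; the same direction and facing vectors can also be passed on to the physical character controller.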